Artificial Intelligence (AI), a branch of computer science, aims to create machines that simulate human intelligence. The concept, evolving since the 1950s, covers various applications like expert systems, natural language processing, and machine vision. John McCarthy, a pivotal figure in AI, described it as the science of making intelligent machines and programs. AI’s history is marked by Alan Turing’s 1950 paper, which introduced the “Turing Test” to assess a machine’s ability to exhibit human-like intelligence.

AI encompasses fields like machine learning and deep learning, focusing on algorithms that enable systems to make data-driven predictions or decisions. Notable developments include OpenAI’s ChatGPT, illustrating a shift in AI’s focus from computer vision to advanced natural language processing, extending to understanding diverse data types.

As AI technology grows, it’s crucial to address ethical considerations, especially in business applications. AI’s scope ranges from mimicking human thinking and actions to rational thinking and actions. Despite technological advancements, no AI system has yet achieved the full range of human cognitive abilities. However, some AI systems have attained expert-level performance in specific tasks. Intelligence is also distinct from instinct: humans adapt their behavior to new circumstances, whereas certain insects repeat fixed routines even when conditions change. AI continues to be a dynamic and ethically significant field, pushing the boundaries of machine capability and intelligence.

Who Created AI?

The genesis of Artificial Intelligence (AI) is largely attributed to British polymath Alan Turing. In the mid-20th century, Turing pioneered the field with his work on computing and machine intelligence. His 1950 paper, “Computing Machinery and Intelligence,” laid the conceptual foundation for AI and introduced the Turing Test to evaluate a machine’s intelligence. Turing’s exploration into machines mimicking human reasoning and learning was groundbreaking. He conceptualized the universal Turing machine, a precursor to modern computers, and during World War II, contemplated how machines could learn from experience and solve problems heuristically. Although other scientists like John McCarthy and Christopher Strachey made significant contributions, with McCarthy coining the term “artificial intelligence” and Strachey creating the first successful AI program in 1951, it was Turing’s early work and ideas that primarily ignited the AI field.

History of Artificial Intelligence

Artificial Intelligence (AI) has evolved significantly from its inception to the present day. Artificial minds were first imagined in myths and later explored by philosophers, but AI’s journey began in earnest with the development of programmable computers in the 1940s. Key figures like Alan Turing pioneered early research, leading to the formal establishment of AI as a field at the 1956 Dartmouth workshop. The initial optimism, marked by significant advancements such as Turing’s Imitation Game and Newell and Simon’s General Problem Solver, gave way to setbacks caused by overambitious expectations and computational limitations. This led to periods known as ‘AI winters,’ when funding and interest dwindled. However, the 1980s saw a resurgence in AI, driven by advancements in algorithms and increased investment.

The introduction of deep learning techniques and expert systems further propelled AI, though it faced another setback by the end of the 1980s. The 1990s and 2000s marked a period of significant achievements, including IBM’s Deep Blue defeating chess champion Garry Kasparov in 1997 and advancements in natural language processing. In recent years, the field has seen a new boom, driven by massive data availability and enhanced computational power, leading to groundbreaking applications in various sectors. AI’s journey reflects a continuous interplay of technological innovation, fluctuating funding, and evolving research objectives.

How Does AI Work?

AI, a transformative force in technology, operates by analyzing extensive data to identify patterns and correlations, enabling machines to make informed predictions. This process involves various cognitive skills, including learning, reasoning, self-correction, and creativity. AI programming is not limited to a single language, with Python, R, Java, C++, and Julia being popular choices among developers. The technology has evolved significantly since Alan Turing’s pioneering work in the 1950s, leading to applications like chatbots and image recognition tools.

Modern AI advancements, particularly in deep learning, have enabled more sophisticated tasks like generating realistic media. AI’s effectiveness hinges on its ability to adapt and improve through continuous learning and self-correction, making it an invaluable tool across various industries. The process of building an AI system involves several steps, including data collection, preprocessing, model selection, training, and optimization, culminating in deployment and continuous learning to ensure accuracy and relevance.
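The steps listed above can be illustrated with a deliberately tiny example. The sketch below is not how any production AI system is built; it assumes made-up data scattered around the line y = 3x + 2, a simple linear model, and plain gradient descent, and every function name is hypothetical. It is meant only to show how data collection, preprocessing, model selection, training, evaluation, and deployment fit together; continuous learning is left out.

```python
import random


def collect_data(n=200, seed=0):
    """Data collection: synthetic points near y = 3x + 2 (made-up data)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(0, 10)
        data.append((x, 3.0 * x + 2.0 + rng.gauss(0, 0.5)))
    return data


def preprocess(data, train_fraction=0.8):
    """Preprocessing: rescale inputs to [0, 1] and hold out a test set."""
    xs = [x for x, _ in data]
    lo, hi = min(xs), max(xs)
    scaled = [((x - lo) / (hi - lo), y) for x, y in data]
    cut = int(len(scaled) * train_fraction)
    return scaled[:cut], scaled[cut:], (lo, hi)


def train(train_set, learning_rate=0.5, epochs=2000):
    """Model selection and training: fit y = w*x + b by gradient descent."""
    w, b = 0.0, 0.0
    n = len(train_set)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train_set) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train_set) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mean_squared_error(model, dataset):
    """Evaluation: average squared prediction error on a dataset."""
    w, b = model
    return sum((w * x + b - y) ** 2 for x, y in dataset) / len(dataset)


def predict(model, scaling, raw_x):
    """Deployment: apply the training-time scaling, then the fitted model."""
    w, b = model
    lo, hi = scaling
    return w * (raw_x - lo) / (hi - lo) + b


data = collect_data()
train_set, test_set, scaling = preprocess(data)
model = train(train_set)
print("test MSE:", mean_squared_error(model, test_set))
print("prediction for x = 4:", predict(model, scaling, 4.0))
```

Because the data were generated around y = 3x + 2, the prediction for x = 4 should land close to 14, and the error on the held-out test set indicates how well the fitted model generalizes to data it has not seen.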

What Are Some Examples of Artificial Intelligence?

Artificial Intelligence (AI) seamlessly integrates into our daily lives, offering advanced solutions across various fields. Key examples include:

Maps and Navigation: AI enhances travel experiences with optimal routing and traffic management through machine learning, providing real-time updates and suggestions.

Facial Detection and Recognition: Utilized in unlocking phones and for security in public spaces, AI can identify and distinguish individual faces.

Text Editors and Autocorrect: AI-driven tools like Grammarly employ machine learning and natural language processing for language correction and suggestions.

Search and Recommendation Algorithms: AI curates personalized content and product recommendations based on user behavior and preferences, enhancing online experiences.

Chatbots: AI powers customer service chatbots for efficient query resolution and order tracking, learning from interactions to improve responses.

Digital Assistants: Virtual assistants like Siri and Alexa use AI to execute tasks based on voice commands, applying natural language processing and machine learning.

Social Media: AI monitors content, suggests connections, and targets ads, enhancing user engagement and personalizing experiences.

E-Payments: AI in banking simplifies transactions and enhances security, detecting potential fraud by analyzing spending patterns.

Other notable AI applications include healthcare diagnostics, gaming advancements like self-learning algorithms, smart home devices for automated control, and e-commerce platforms that tailor user experiences. AI’s role in space exploration, through processing vast data for discoveries, showcases its potential in advancing human knowledge. These examples reflect AI’s transformative impact in delivering efficient, personalized solutions across diverse sectors.
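The fraud-detection idea mentioned under E-Payments, spotting transactions that deviate from a customer’s usual spending pattern, can be sketched very simply. The example below is an illustration only: it assumes a hypothetical spending history and uses a basic z-score rule (how many standard deviations an amount lies from the customer’s average), whereas real banking systems rely on far richer models and many more signals. The function name and threshold are assumptions made for this sketch.

```python
from statistics import mean, stdev


def flag_unusual(history, new_amounts, threshold=3.0):
    """Return the new amounts that lie more than `threshold` standard
    deviations away from the average of past spending."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past amounts are identical, so a z-score cannot be computed.
        return []
    return [a for a in new_amounts if abs(a - mu) / sigma > threshold]


# Hypothetical past purchases and three new transactions to check.
history = [42.0, 38.5, 51.0, 45.2, 40.1, 47.8, 39.9, 44.3]
new_amounts = [43.0, 49.5, 980.0]
print(flag_unusual(history, new_amounts))  # prints [980.0]
```

The two ordinary purchases pass unnoticed, while the 980.0 transaction is flagged because it sits far outside the customer’s typical range.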

Artificial Intelligence Applications

AI revolutionizes e-commerce through personalized shopping experiences and fraud prevention. In education, it streamlines administrative tasks and enhances learning with smart content and personalized approaches. AI’s influence on lifestyle includes autonomous vehicles, spam filters, and facial recognition, enhancing daily convenience and security. In navigation and robotics, AI optimizes route planning and robotic agility. AI applications in healthcare include disease detection and drug discovery, while in agriculture, it aids in crop management and soil analysis. Additionally, AI plays crucial roles in gaming, space exploration, and social media, providing personalized experiences and enhancing safety and efficiency.

What are 10 Ways AI is Used Today?

AI technology is deeply integrated into our daily lives, enhancing convenience and efficiency across various domains. Here’s a summary of 10 significant ways AI is used today:

- Personal Assistants: AI-driven assistants like Amazon Alexa, Google Home, and Siri offer interactive assistance, managing tasks from setting reminders to controlling smart home devices.

- Navigation and Traffic Management: AI in apps like Google Maps and Waze optimizes routes based on real-time traffic data, offering quicker, more efficient travel.

- Social Media Optimization: Platforms like Facebook and Instagram use AI for personalized feeds and content moderation, including spam and fake news detection.

- Healthcare Advances: AI aids in early disease detection through diagnostic tools and health monitoring via devices like Fitbit and Apple Watch.

- Banking and Finance: AI enhances fraud detection, credit scoring, and personalized banking assistance through chatbots.

- Enhanced Shopping Experience: E-commerce giants like Amazon personalize shopping experiences using AI to analyze browsing and purchase patterns.

- Entertainment Personalization: Streaming services like Netflix and Spotify use AI for personalized recommendations based on viewing and listening habits.

- Transportation Innovation: Self-driving vehicles and traffic management systems employ AI for safer, more efficient travel.

- Image Recognition: AI technologies in facial recognition and object detection are used in various applications, including security and medical diagnosis.

- Educational Tools: AI-driven platforms like Duolingo offer personalized learning experiences, adapting to individual learning styles and progress.

Beyond these, AI provides real-time language processing in communication, enhances search engine accuracy, and drives innovations in gaming and space exploration, making everyday tasks more intuitive and user-friendly.

Is AI Good or Bad for Society?

Artificial Intelligence (AI) offers a blend of benefits and challenges, impacting society in diverse ways. Its integration into daily life, from personal assistants like Alexa to AI-driven investment tools, demonstrates its expansive influence. AI’s ability to analyze extensive data sets is invaluable in various sectors, including banking for fraud detection and healthcare for improved diagnoses. These advancements, however, come with potential risks. Experts like Stephen Hawking have expressed concerns about self-developing AI systems, warning of possible negative outcomes if AI surpasses human intelligence.

The risks of AI misuse, such as in cybercrime, highlight the need for careful regulation and ethical deployment. Despite potential drawbacks, AI’s positive contributions to accessibility, quality of life, and decision-making are significant. Its role in personalized marketing, smart home devices, and even navigation exemplifies its utility. However, the reliance on AI also raises questions about reduced human interaction and critical thinking skills. Future applications in various fields, including healthcare and entertainment, indicate AI’s staying power and evolving role in society. Balancing AI’s benefits and risks is crucial for harnessing its potential while safeguarding against its pitfalls. On balance, AI’s impact on society is likely to be positive, provided its development and application are responsibly managed.

Why do People Fear AI?

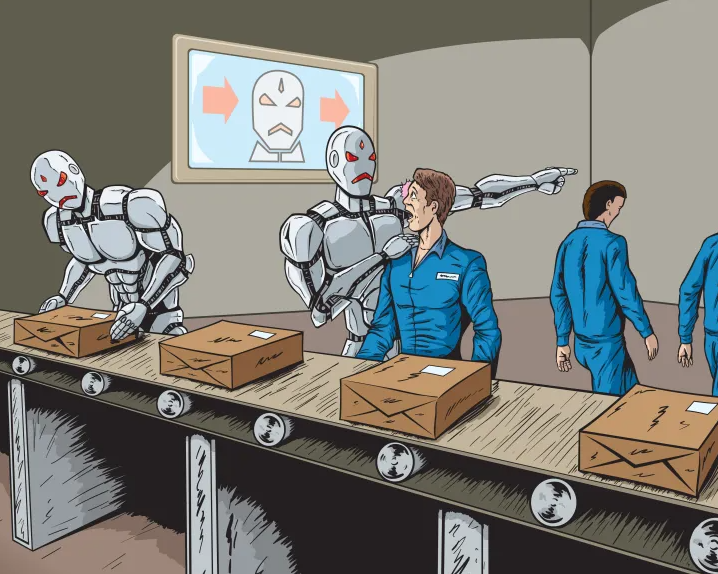

The pervasive fear of Artificial Intelligence (AI) stems from a variety of concerns, magnified by science fiction and media. One major worry is the potential for job loss due to AI automation. Studies and experts, like those from Oxford and Goldman Sachs, predict significant impacts on the job market, with a potential rise in unemployment in various sectors. This fear is fueled by historical transitions, where technological advancements have led to shifts in the workforce.

Another concern is the loss of control and the emergence of self-planning, autonomous AI systems. High-profile figures like Elon Musk and research from institutes like The Future of Life Institute and Penn have highlighted the risks of AI evolving beyond human control, potentially leading to negative consequences for humanity.

There’s also anxiety about AI in warfare, with the development of autonomous weapons, and the risk of AI-enhanced cyber warfare. This is coupled with fears of AI-induced income inequality and societal unrest, as well as potential bias in AI systems leading to unfair and discriminatory outcomes.

The potential for AI to affect human creativity and originality also raises concerns. As AI demonstrates capabilities in generating creative content, professionals in creative fields worry about the future of their careers and the uniqueness of human-generated art and ideas.

Despite these fears, it is important to recognize the positive potential of AI in various fields, from healthcare to education. While AI anxiety is understandable, balancing these fears with the benefits and responsible management of AI technology is essential for its ethical and beneficial integration into society.

Why do We Need AI?

Artificial Intelligence (AI) is essential today due to its unparalleled ability to process, interpret, and make decisions from vast amounts of data, far beyond human capacity. Originating in the 1950s, AI’s significance has escalated with advancements in cloud processing and computing power. It enables organizations to unlock potential from previously untapped data resources, leading to more informed decision-making.

AI’s impact is particularly notable in business innovation, offering automation that enhances consistency, speed, and scalability, with some companies reporting time savings of 70%. Beyond efficiency, AI drives business growth, with successful scalers achieving three times the return on AI investments compared to those in the pilot phase. This technology’s adaptability through machine learning and deep learning allows businesses to stay competitive in rapidly changing environments, turning AI into a strategic necessity.

In everyday life, AI’s influence is growing, from self-driving cars to facial recognition, improving efficiency and productivity. It automates tasks like data entry and customer service and assists in complex problem-solving across various industries. AI’s learning and predictive capabilities, exemplified by technologies like machine learning, play a crucial role in advancement.

AI also holds the potential to enhance human interactions, such as recognizing and responding to emotions, and to boost efficiency through data analysis. The technology is commonly divided into four categories (reactive machines, limited-memory systems, theory-of-mind AI, and self-aware AI), which together describe the range of its current and anticipated capabilities. In summary, AI is indispensable for its ability to automate routine work, increase productivity, drive business growth, and assist in complex decision-making, making it a cornerstone of modern technological advancement.

How Does Artificial Intelligence Help in Our Daily Life?

Artificial Intelligence (AI) is reshaping our world with remarkable benefits, ranging from reducing human errors to taking on risky tasks. AI systems can make precise decisions based on vast amounts of data, as exemplified by robotic surgeries that enhance patient safety. AI robots can undertake hazardous tasks, like defusing bombs or deep-sea exploration, thanks to their durable and resilient nature. Their 24/7 availability boosts productivity, as seen in AI-driven customer support chatbots that offer round-the-clock assistance. AI’s digital assistance reduces the need for human interaction, as observed in sophisticated chatbots whose replies can be hard to distinguish from a human’s. It’s instrumental in pioneering innovations like self-driving cars, enhancing road safety and accessibility.

AI can also support less biased decision-making, as in AI-powered recruitment systems designed to mitigate hiring biases. It excels in performing repetitive tasks with high accuracy, as seen in robots on manufacturing assembly lines. Everyday applications of AI, like Google Maps or virtual assistants, make our lives more efficient. In high-risk situations, AI can intervene where human safety is at risk, as demonstrated by its use in disaster management. In medicine, AI contributes significantly, from early disease detection to personalized treatment plans.

AI’s automation capabilities extend to various domains, including recruitment, where it can streamline the hiring process. It’s crucial in decision-making, as seen in AI-driven CRM tools that help businesses strategize effectively. AI’s ability to solve complex problems is evident in applications like fraud detection in finance. Furthermore, AI is a key economic driver, predicted to contribute significantly to global GDP growth.

The Role of Artificial Intelligence in Future Technology

Artificial Intelligence (AI) is rapidly transforming future technology across industries, improving efficiency, productivity, decision-making, and customer experience in sectors such as healthcare, transportation, cybersecurity, manufacturing, financial services, education, and media. AI systems analyze vast data sets, enabling streamlined processes, automation of repetitive tasks, and optimized workflows. They assist in predictive modeling and real-time analysis, providing valuable insights for informed business decisions.

In healthcare, AI contributes to accurate diagnoses, personalized treatments, and patient monitoring. Transportation is revolutionized with autonomous vehicles, using deep learning for safe, efficient driving. AI strengthens cybersecurity by detecting threats and adapting to new challenges. Manufacturing processes are improved with AI-driven robotics, achieving higher production rates and quality control. Financial services leverage AI for analytics and personalized advice. Education and media sectors are adapting AI for personalized learning and content creation. Ethical considerations are crucial as AI advances, ensuring responsible development and use. AI’s role in future technology is pivotal, promising significant advancements and reshaping industries with its potential for innovation and problem-solving.