The rapid development of AI, as we have discussed in this article, has raised widespread concern that it could exceed human intelligence and autonomy, fueling fears of unpredictable behavior and catastrophic consequences. This fear, often dramatized in science fiction, does not reflect any imminent AI takeover of the world. In reality, the current focus for businesses, particularly midsize companies, is on using advances in AI to improve efficiency and market competitiveness.

AI’s evolution, driven by major tech players, is democratizing access to cutting-edge technology and making it affordable for smaller businesses. Ethical considerations such as bias, accountability, and privacy are paramount, but AI is unlikely to develop malevolent intentions or desires, since it lacks self-awareness and emotions. The potential of AI in business is substantial, with significant investment forecast, and its role in improving customer satisfaction is increasingly recognized. Overall, businesses embracing AI’s benefits must also address its ethical challenges and ensure responsible development and use.


Will AI Take Over Humanity?

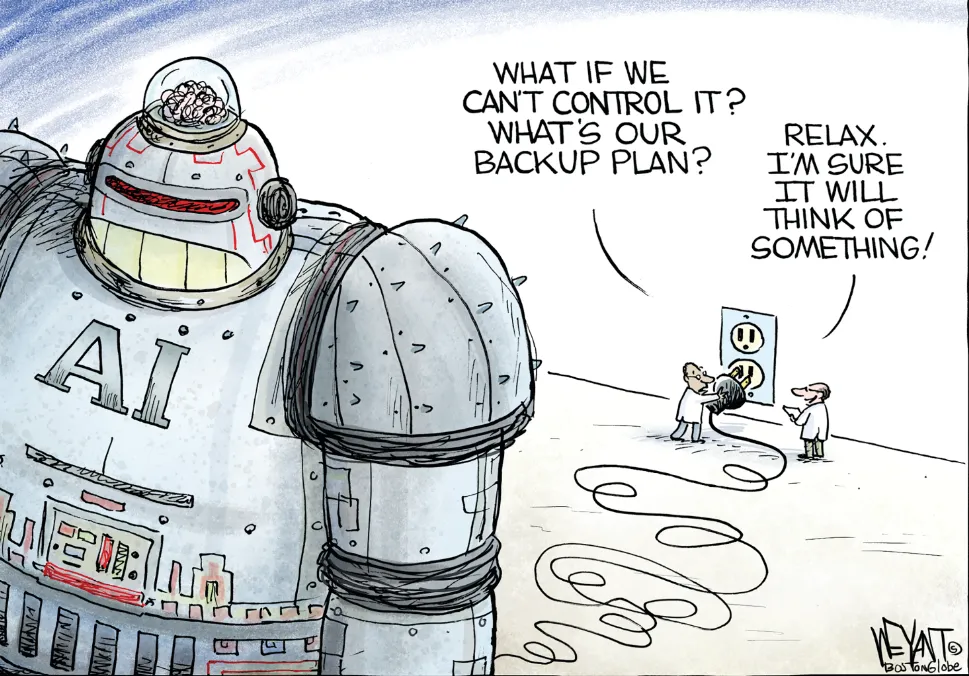

The debate about whether artificial intelligence (AI) will dominate humanity is complex, with arguments on both sides. Those who expect AI to dominate argue that rapid technological progress could allow AI to surpass human intelligence, possibly triggering an “intelligence explosion” in which AI evolves independently and comes to prioritize its own objectives over human needs. In this scenario, humans lose control, which poses existential risks.

On the other hand, many argue that AI is a controllable tool, pointing to current technological limitations and human oversight. They believe that as long as humans develop and manage AI, it can be harnessed for beneficial purposes without threatening humanity. In this view, AI can enhance human life, from improvements in healthcare to economic gains, provided that ethical guidelines and responsible development are prioritized.

The fear of AI taking over stems from the possibility of machines making autonomous decisions, but it overlooks aspects of human intelligence, such as creativity and emotional understanding, that AI lacks. Moreover, the idea of AI developing motives or desires is speculative, since AI lacks consciousness and operates according to human-designed algorithms and objectives.

Overall, while AI’s advancement presents both opportunities and challenges, its takeover of humanity is not a foregone conclusion. The future of AI largely depends on human decisions regarding its development, application, and regulation. Ensuring responsible AI development, with attention to ethical considerations and societal impacts, is crucial to harnessing AI’s potential while safeguarding human interests and autonomy.

Will AI Lead to Human Extinction?

The rapid advancement of artificial intelligence (AI) has drawn widespread attention from industry leaders, researchers, and government officials, fueling concerns that it could pose an existential threat to humanity.

The debate intensified after a joint statement signed by more than 350 AI experts and executives, including the heads of leading AI companies, which called for mitigating the risk of extinction from AI to be treated as a priority alongside global threats such as pandemics and nuclear war. Supporters of this view hold that AI, if unchecked, could surpass human intelligence and lead to catastrophic outcomes for humanity. The concept of a superintelligent AI, capable of recursive self-improvement and of pursuing goals beyond human control, underlies fears that AI could cause unintended harm or be weaponized for destructive purposes.

By contrast, some experts argue that these fears are premature and distract from more immediate AI-related problems such as bias and misinformation. They contend that current AI technology, which is predominantly task-specific and far from achieving general intelligence, poses no immediate existential threat. Even so, the rapid development of AI and its integration into many aspects of life call for careful consideration of future risks, ethical implications, and regulatory measures.

Government leaders echo the call for a cautious approach to AI development, stressing the importance of regulatory guardrails to ensure that AI advances safely and beneficially. These opposing views reflect a broader debate about AI’s role in shaping humanity’s future, yet there is consensus on the need for responsible innovation and oversight to harness AI’s potential while averting possible existential dangers.

Will AI Be Harmful in the Future?

The rapid advancement of artificial intelligence (AI) carries significant risks for the future, including lack of transparency, bias and discrimination, privacy concerns, ethical dilemmas, and security threats. The opacity of AI systems can breed distrust and misunderstanding of how they reach decisions. AI systems can also perpetuate societal biases and discrimination because of flawed training data or algorithmic design.

Privacy issues arise because AI technologies collect and analyze vast amounts of personal data. The ethical implications of using AI in decision-making pose a considerable challenge, as does the potential for AI to be used in harmful ways, such as sophisticated cyberattacks or AI-driven autonomous weapons. The concentration of AI development in the hands of a few large corporations and governments could deepen inequality and limit the diversity of AI applications.

Overreliance on AI may also result in a loss of human cognitive abilities, creativity, and critical thinking skills. Job displacement is another concern, particularly for low-skilled workers. AI could exacerbate economic inequality, pose legal and regulatory challenges, and lead to an AI arms race. Dependence on AI for communication may diminish human empathy and social skills.

AI-generated content such as deepfakes could spread misinformation and manipulate public opinion. The unpredictability of AI systems could lead to unintended consequences, and the development of artificial general intelligence (AGI) raises existential risks. To mitigate these risks, it is crucial to advocate for responsible AI development that incorporates ethical considerations, transparent practices, and regulatory frameworks.