NEURA Robotics Expands Cognitive Robot Lineup and Opens Neuraverse Platform

NEURA Robotics debuts new robots and an open platform at Automatica 2025. The 4NE1 humanoid and MiPA robot target both…

Writer Attracts Major Clients and Investment With Cost-Efficient AI Agents

Writer combines proprietary AI models and synthetic data to lower costs for enterprises. Major corporations use Writer to increase efficiency…

Huawei Pushes AI Agents in HarmonyOS 6 to Rival Android and iOS

Huawei integrates AI agents at the core of HarmonyOS 6's design. Developers access advanced automation without complex model training. HarmonyOS…

Robotics Startups Secure Spotlight at U.S. Trade Shows

Robotics trade shows in the U.S. highlighted both industrial and defense innovations. AI-driven startups like Cambrian Robotics and Kinisi drew industry…

Hexagon Introduces AEON Humanoid to Tackle Labor Shortage

Hexagon launches AEON humanoid to support various industrial sectors. AEON combines sensor, AI, and battery technologies in collaborative pilots. Partnerships…

Black-I Robotics Secures Victory in Chewy Picking Robot Contest

Black-I Robotics won the Chewy CHAMP Challenge with a full-stack solution. Breezey Machine Company earned second place for modular gripping…

Ex-OpenAI Staff Challenge Leadership on AI Safety and Profit Focus

Former OpenAI staff allege profit focus is overriding safety commitments. They urge restoring nonprofit control and stronger oversight mechanisms. Leadership’s…

Apple Integrates AI to Advance In-House Chip Design

Apple pursues generative AI to streamline chip design for its devices. Collaborations with Broadcom, Synopsys, and Cadence support new infrastructure.…

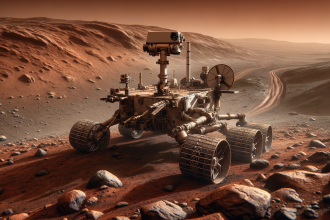

NASA’s Robotics Lead Shares Insights on Space and Industry Progress

NASA robotics projects span space, industry, and human support applications. Education and proactive strategies stand out in Dr. Ambrose’s approach.…

Businesses Accelerate AI Integration While Tackling Deployment Hurdles

Businesses increase AI investments and appoint dedicated leadership roles. Major hurdles persist in data quality, model training, and integration. Cloud…