NVIDIA Corporation has introduced NVIDIA Omniverse Cloud Sensor RTX, a set of microservices designed to simulate sensors with high physical accuracy. The goal is to accelerate the development of autonomous machines across a range of industries. The announcement coincides with NVIDIA researchers presenting 50 projects on visual generative AI at the CVPR conference in Seattle.

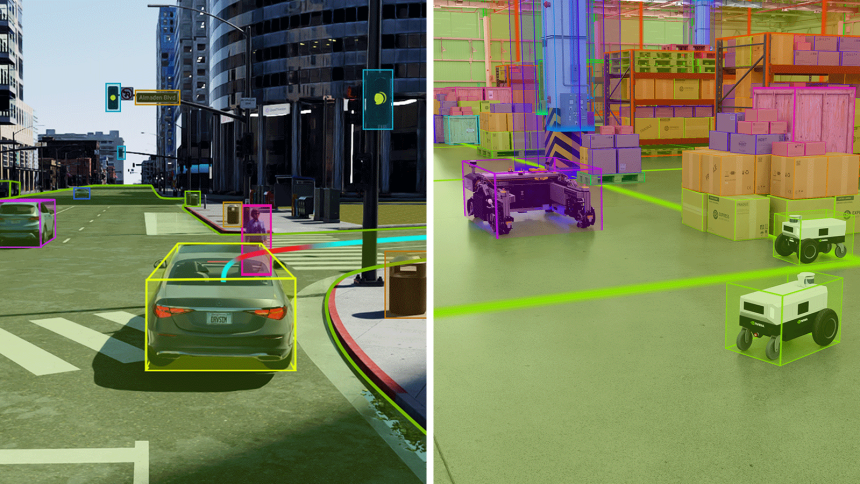

Omniverse Cloud Sensor RTX enables realistic sensor simulation for autonomous-machine development. It combines real-world data from multiple sensors with synthetic data to build accurate virtual environments, so developers can test AI software before deploying it in the real world. The service will be available later this year.

Support for Large-Scale Simulation

Built on the OpenUSD framework and powered by NVIDIA RTX ray-tracing and neural-rendering technologies, Omniverse Cloud Sensor RTX combines data from videos, cameras, radar, and lidar. It exposes APIs intended to speed up autonomous-machine development. Even in scenarios where real-world data is scarce, the microservices can simulate a broad range of activities, which NVIDIA says improves the safety and efficiency of AI deployments.

Omniverse Cloud Sensor RTX will be available to autonomous-vehicle software developers, including CARLA, Foretellix, and MathWorks, allowing them to validate and integrate digital twins of their systems in virtual environments. This reduces the need for physical prototyping, saving time and resources. The announcement also coincides with NVIDIA’s first-place win in the Autonomous Grand Challenge for End-to-End Driving at Scale at CVPR.

Innovations in AI Research

NVIDIA researchers are also presenting two finalist papers for CVPR’s Best Paper Award. One examines the training dynamics of diffusion models; the other addresses high-definition maps for autonomous vehicles. NVIDIA’s winning workflow in the End-to-End Driving at Scale track, which can be replicated using Omniverse Cloud Sensor RTX, outperformed more than 450 entries worldwide, a result NVIDIA presents as evidence of generative AI’s value in building comprehensive self-driving models.

FoundationPose, another model NVIDIA presented, simplifies object pose estimation and tracking in 3D. It needs only a small set of reference images or a 3D representation of an object to recognize and track it in complex scenes, which could benefit industrial robotics and augmented reality applications. NeRFDeformer, meanwhile, lets developers transform an existing neural radiance field (NeRF) using a single RGB-D image, simplifying the creation of 3D scenes from 2D images.

Earlier coverage of NVIDIA’s AI work has highlighted the company’s ongoing efforts in synthetic data generation and sensor simulation. Previous offerings such as NVIDIA DRIVE Constellation focused on autonomous-vehicle testing through cloud-based simulation. Omniverse Cloud Sensor RTX extends these capabilities to a broader range of autonomous machines and industrial applications.

Those earlier simulation platforms primarily targeted specific domains such as automotive. Omniverse Cloud Sensor RTX broadens that scope, drawing on its integration with OpenUSD and RTX technologies. The shift points toward more general, scalable simulation tools that serve industries ranging from robotics to smart cities.

Conclusion

NVIDIA’s introduction of Omniverse Cloud Sensor RTX marks a notable step in autonomous-machine development. By combining real-world and synthetic data in one platform, NVIDIA aims to make AI deployment safer, more efficient, and more cost-effective across industries. Building on OpenUSD scene description and RTX ray tracing gives developers tools to create and test AI applications in realistic virtual environments, and the move beyond earlier, domain-specific solutions underscores NVIDIA’s focus on the autonomous systems sector.