The ethical issues in artificial intelligence (AI) are varied and complex, encompassing concerns about bias and discrimination, transparency and accountability, creativity and ownership, social manipulation and misinformation, privacy and security, job displacement, and autonomous weapons. AI algorithms, trained on extensive data, can inadvertently perpetuate societal biases, affecting decisions in critical areas like employment and justice. AI’s “black box” nature often obscures how decisions are reached, raising questions about accountability, especially when errors occur.

Ownership of AI-generated content remains unclear, creating legal ambiguities. AI’s potential for spreading misinformation and manipulating public opinion is alarming, as seen in deepfakes. Privacy concerns escalate with AI’s reliance on vast personal data, while job displacement due to automation poses economic and social challenges. Ethical dilemmas also arise with AI-powered autonomous weapons, necessitating international regulations. Addressing these issues requires multi-faceted collaboration, clear regulations, and a commitment to ethical AI development and deployment.

Can AI be Ethical and Moral?

Artificial Intelligence (AI) is significantly shaping decision-making in governance and other sectors, as discussed in this article, and countries are increasingly introducing AI regulations. AI tools analyze complex patterns and forecast future scenarios, aiding informed decisions. However, AI systems can inherit biases from both their training data and their developers, which can skew outcomes in governance applications. With these biases in mind, this section explores whether AI can be ethical and moral.

Initiatives like AI Jesus, based on GPT-4 and PlayHT, demonstrate efforts to instill human values in AI systems. AI Jesus is designed to align with biblical values and to keep its interactions with users positive and ethical. This example suggests that imparting some form of moral judgment to AI may be feasible, drawing on insights from cognitive science, psychology, and moral philosophy. Computer scientists are working on making autonomous systems and algorithms reflect human ethical values. Methods like supervised fine-tuning and secondary models adjust a model’s responses, or the likelihood of particular outputs, to promote specific values or moral stances.

Despite these advances, defining ‘ethical AI’ remains complex, because what counts as ethical varies across societies and cultures. Some propose a ‘moral parliament’ approach, in which multiple ethical views are represented within an AI system. Projects like Delphi and Moral Machine draw on public input about ethical dilemmas to guide AI’s moral compass. Integrating an understanding of human cognitive biases and ethical principles into AI algorithms, along with collaboration between computer science and neuroscience, may offer a balanced approach to ethical AI development.
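As a rough illustration of the ‘moral parliament’ idea mentioned above, the sketch below lets several ethical views score the same options and combines the scores by weight. It is a deliberate simplification: the function name `parliament_choice`, the three views, their weights, and the scores are all hypothetical, and fuller versions of the proposal may involve negotiation between views, which this omits.

```python
def parliament_choice(options, views):
    """Return the option with the highest weighted average approval.

    views: list of (weight, scores) pairs, where scores maps each option
    to an approval value in [0, 1] under that ethical view.
    """
    total_weight = sum(weight for weight, _ in views)
    tallies = {
        option: sum(weight * scores.get(option, 0.0) for weight, scores in views)
        / total_weight
        for option in options
    }
    best = max(tallies, key=tallies.get)
    return best, tallies


# Hypothetical views and scores, invented purely for illustration.
views = [
    (0.5, {"disclose": 0.9, "withhold": 0.2}),  # outcome-focused view
    (0.3, {"disclose": 0.4, "withhold": 0.8}),  # duty-focused view
    (0.2, {"disclose": 0.7, "withhold": 0.5}),  # character-focused view
]

best, tallies = parliament_choice(["disclose", "withhold"], views)
print(best, tallies)
```

The weights stand in for how much say each view gets. Choosing them is itself an ethical decision, which is part of why defining ‘ethical AI’ stays contested.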

Do We Need Ethics in AI?

Artificial Intelligence (AI) is increasingly integrated into various aspects of our lives, making its ethical considerations paramount. AI’s potential to replicate or augment human intelligence brings challenges that parallel the flaws in human judgment. AI projects based on biased data can harm marginalized groups, and hastily built algorithms risk ingraining these biases, which underscores the need for an ethical code in AI development.

Films like “Her” and real-life applications like Lensa AI and ChatGPT highlight AI’s influence on human experience and the ethical dilemmas it poses, from appropriating artists’ work to assisting with tasks like essay writing or coding contests. As AI pervades sectors like healthcare, the urgency of addressing ethical concerns grows. Questions arise about how to ensure AI is unbiased and how to mitigate future risks, and answering them requires responsible, collaborative action from stakeholders.

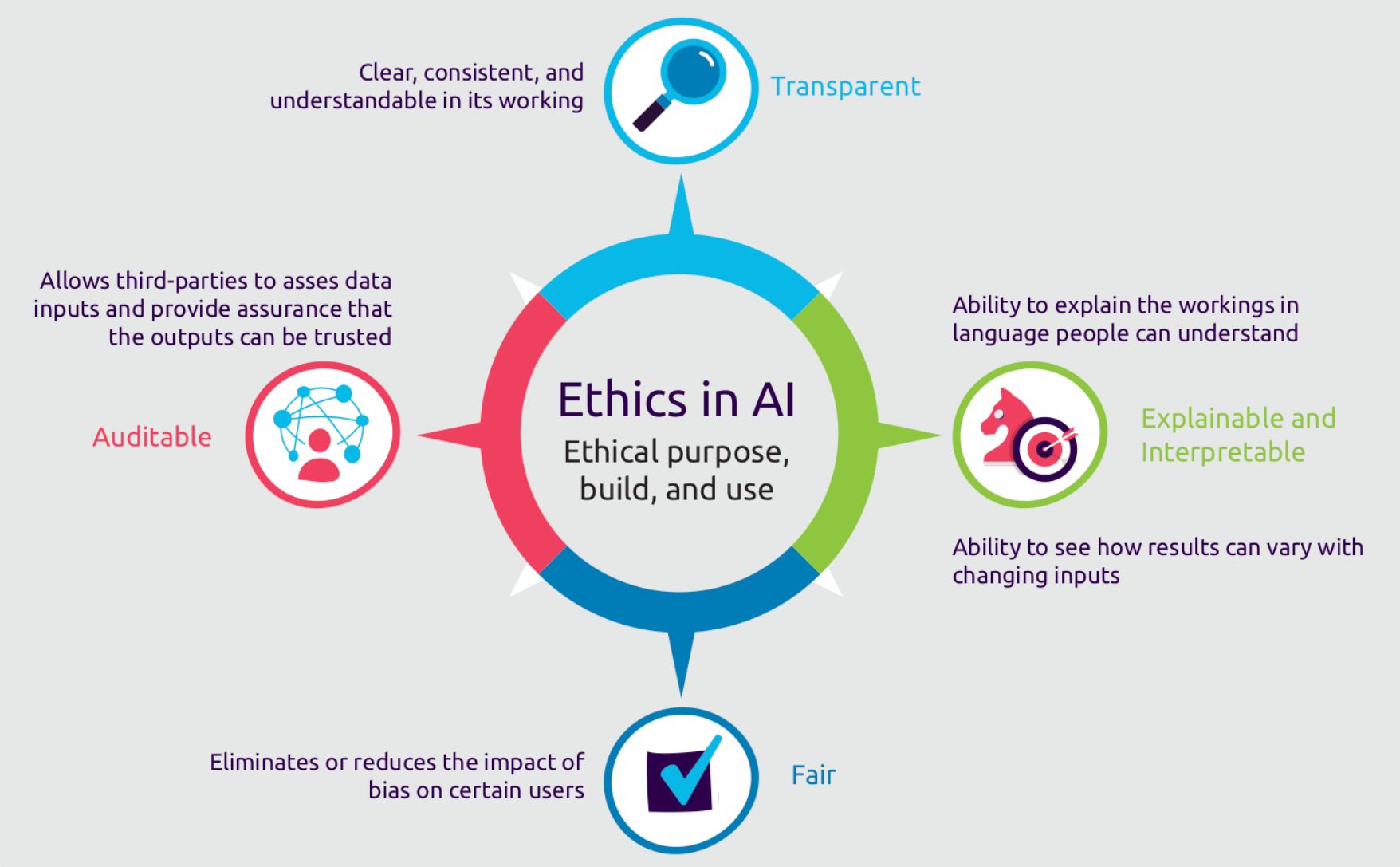

The controversy surrounding AI’s future role in society ranges from fears of it becoming uncontrollable to calls for its integration for the sake of efficiency gains. AI’s capacity to process data in human-like ways, which is advancing rapidly through Machine Learning (ML), brings both potential benefits and dangers. Establishing ethical guidelines is crucial to balance AI’s potential harms and benefits, with a focus on benefiting society and ensuring fairness, privacy, security, reliability, and transparency.

A telling example is the Optum algorithm used in healthcare, which unintentionally discriminated against Black patients due to historical bias in data: it used past healthcare spending as a proxy for medical need, and less had historically been spent on Black patients with the same level of need. This highlights the necessity of an AI ethical risk program at the organizational level, involving ethicists, lawyers, business strategists, technologists, and bias scouts to systematically address ethical risks.

How Can AI Be Biased?

Bias in Artificial Intelligence (AI) arises from the human tendency to form unconscious associations, which can inadvertently seep into AI models, leading to skewed outputs. This bias manifests at various stages of the AI development process:

Data Collection: Bias often originates when the data collected is not diverse or representative of the reality the AI is meant to model. For example, if the training data includes only male employees, a model trained on it will not accurately predict female employees’ performance.

Data Labeling: Biases can be introduced if annotators interpret labels differently.

Model Training: In this critical phase, an imbalance in the training data or an inappropriate model architecture can lead to biased outcomes.

Deployment: Bias can emerge if the system isn’t tested with diverse inputs or monitored post-deployment.
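The data collection stage above lends itself to a simple check. The following is a minimal sketch, not an established auditing method: it compares each group’s share of a dataset against a reference share and flags groups that fall short. The function name `representation_report`, the `gender` field, the 50/50 reference shares, and the 0.10 tolerance are all assumptions chosen for illustration.

```python
from collections import Counter


def representation_report(records, attribute, reference_shares, tolerance=0.10):
    """Compare each group's share of the data with a reference share.

    Returns {group: (observed_share, reference_share, flagged)}, where
    flagged is True when the observed share falls short of the reference
    share by more than `tolerance`.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        report[group] = (observed, expected, expected - observed > tolerance)
    return report


# Hypothetical toy data mirroring the example above: only male employees.
training_records = [{"gender": "male", "rating": 4} for _ in range(20)]

report = representation_report(
    training_records, "gender", {"male": 0.5, "female": 0.5}
)
for group, (observed, expected, flagged) in report.items():
    print(f"{group}: observed={observed:.2f} expected={expected:.2f} flagged={flagged}")
```

A check like this only catches missing or under-represented groups. It says nothing about labeling quality or about how the model behaves after deployment, which need their own tests.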

Both implicit (unconscious attitudes) and explicit (conscious prejudices) biases contribute to these issues. Implicit biases, often absorbed from dominant social messages, are particularly insidious as they can influence behaviors and decisions subconsciously.

AI biases can reinforce harmful stereotypes and exacerbate social injustices, as seen in cases where facial recognition systems misidentify people of color or language models perpetuate gender stereotypes. This necessitates a vigilant approach to detecting and rectifying biases in AI, ensuring fairness in data-driven decision-making.
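One common way to start detecting this kind of disparity is to compare error rates across groups on a labeled evaluation set. The sketch below assumes such a set exists; the group names, the labels, and the helpers `error_rate_by_group` and `largest_gap` are hypothetical and not taken from any particular fairness toolkit.

```python
from collections import defaultdict


def error_rate_by_group(examples):
    """Compute the fraction of wrong predictions for each group.

    examples: iterable of (group, predicted, actual) tuples.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in examples:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the highest and lowest per-group error rate."""
    return max(rates.values()) - min(rates.values())


# Hypothetical evaluation results, invented purely for illustration.
evaluation = (
    [("group_a", "match", "match")] * 9
    + [("group_a", "match", "no_match")] * 1
    + [("group_b", "match", "match")] * 6
    + [("group_b", "match", "no_match")] * 4
)

rates = error_rate_by_group(evaluation)
print(rates, largest_gap(rates))
```

A large gap between groups is a signal to investigate, not a verdict. Which metric matters (overall error, false positives, false negatives) depends on how the system is used.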

As AI’s influence grows across sectors, it’s crucial to understand that biases in AI are not merely technical issues but also ethical concerns that require a multidisciplinary approach, including inputs from ethicists, social scientists, and technologists, to ensure responsible AI deployment.

Is AI More Biased than Humans?

Why are People Worried About AI?

Public apprehension about Artificial Intelligence (AI) is increasing, overshadowing the enthusiasm for its potential benefits. According to a Pew Research Center survey, a majority of Americans express more concern than excitement about AI in daily life. The share who say so rose from 37% in 2021 to 52%, reflecting growing awareness and unease about AI’s implications. Concerns vary across AI applications, with mixed feelings about its use in public safety by police and in medical care by doctors. However, there is significant anxiety about AI’s impact on personal data privacy, aligning with calls for robust data protection laws.

Underlying these concerns are fears that AI systems may reinforce existing societal biases, such as racism, sexism, and discrimination against marginalized groups. Although AI can also uncover and prevent these biases, its potential misuse raises alarm. Many feel a lack of agency in shaping AI’s trajectory, a sentiment echoed by former US Secretary of State Condoleezza Rice regarding global perspectives on AI.

To address these worries, it’s crucial to scrutinize the motives behind AI narratives and ensure a society-wide conversation about AI’s influence. Learning from history, regulation can be effective without stifling innovation. Engaging with AI responsibly, understanding its role in our lives, and demanding accountability are steps towards mitigating AI-induced anxieties, termed “AI-nxiety.” Responsible development of AI involves understanding its non-sentient nature, ensuring ethical data usage, and promoting AI as an aid rather than a replacement for human capabilities.